ChatGPT O3 and the new ceiling of machine intelligence

- marketing

- Categories: Isidoros' blogposts

- Tags: AI, CX, Loyalty

Prefer listening? I transformed this article into a podcast using Google’s NotebookLM. It’s surprisingly accurate and even expands on some of the ideas. Give it a listen!

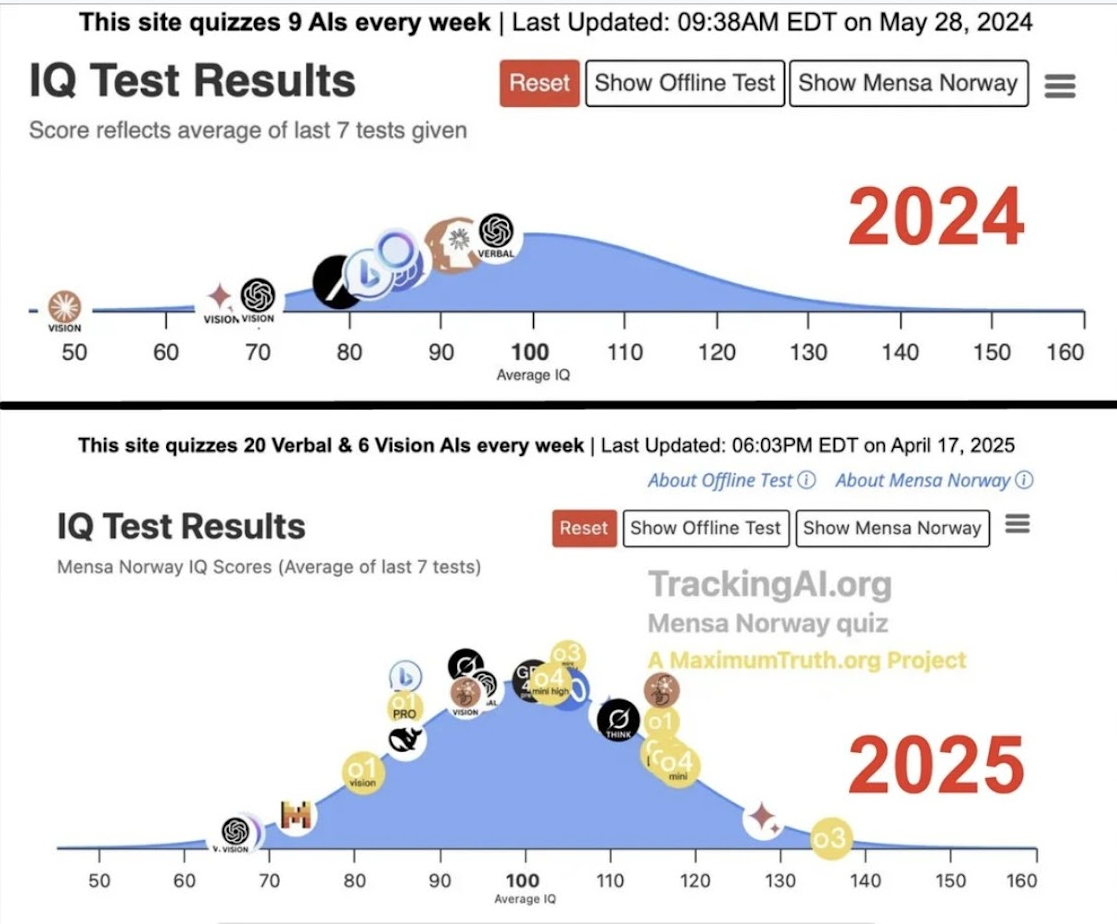

From May 2024 to today, the top AI score on Mensa’s IQ quiz jumped from 96 to 136, higher than 98% of humans. The star of the show is OpenAI’s brand-new O3, the first system to sustain a 136 IQ average. Not to be outdone, Google’s Gemini 2.5 Pro now tops key reasoning tests like GPQA and AIME 2025, showing that the ceiling keeps rising. Raw numbers never tell the whole story, but they prove one thing: AI brain-power is skyrocketing!

Why this matters

Einstein’s IQ is often pegged at about 175. AI gained roughly 40 points in the past year and now sits about 39 points short of that mark, so at the current pace it could get there in roughly a year.
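For readers who want to check the arithmetic, here is a back-of-the-envelope sketch of that estimate. It assumes the jump from 96 to 136 took about twelve months and that progress stays linear, which is a big assumption and not a forecast. The function name `months_to_target` is made up for this illustration.

```python
# Back-of-the-envelope linear extrapolation of the scores quoted in the article.
START_SCORE = 96      # top AI score in May 2024 (from the article)
CURRENT_SCORE = 136   # top AI score today (from the article)
TARGET_SCORE = 175    # the Einstein estimate used in the article
MONTHS_ELAPSED = 12   # ASSUMPTION: "May 2024 to today" is about one year


def months_to_target(start, current, target, months_elapsed):
    """Months until `target` if the score keeps rising at the same linear rate."""
    points_per_month = (current - start) / months_elapsed
    return (target - current) / points_per_month


months = months_to_target(START_SCORE, CURRENT_SCORE, TARGET_SCORE, MONTHS_ELAPSED)
print(f"{months:.1f} months")  # prints "11.7 months"
```

Change `MONTHS_ELAPSED` or `TARGET_SCORE` and the answer moves quickly, which is a good reminder of how rough the estimate is.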

What will it mean for human civilization to be surrounded by entities smarter than Einstein that cost from zero to twenty Euros per month?

For companies, the real value isn’t the score; it’s how this brain-power solves everyday problems. The biggest game-changer I’ve seen is O3’s ability to use external tools mid-thought.

- It spots when a calculator, database, or Python script is needed.

- It writes the code by itself.

- It runs that code while still “thinking.”

- It reads the results and keeps going, tightening the loop between question and answer. 😮
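The four steps above can be sketched as a small loop. This is only a toy illustration of the pattern, not how O3 works internally: `fake_model` is a hypothetical stand-in that always returns the same canned code, and `run_python` uses a bare `exec` where a real system would use a sandbox.

```python
import contextlib
import io


def fake_model(question, tool_output=None):
    """Hypothetical stand-in for a reasoning model; not a real API."""
    if tool_output is None:
        # Steps 1-2: notice that a calculation is needed and write the code.
        return {"type": "code", "code": "print(sum(range(1, 101)))"}
    # Step 4: read the tool result and finish the answer.
    return {"type": "answer", "text": f"The sum is {tool_output}."}


def run_python(code):
    """Step 3: run the generated code and capture what it prints."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(code, {})  # a real system would use a sandbox, not a bare exec
    return buffer.getvalue().strip()


def answer(question, max_steps=5):
    """Alternate between the model and the tool until the model answers."""
    tool_output = None
    for _ in range(max_steps):
        step = fake_model(question, tool_output)
        if step["type"] == "answer":
            return step["text"]
        tool_output = run_python(step["code"])
    raise RuntimeError("no answer within the step limit")


result = answer("What is 1 + 2 + ... + 100?")
print(result)  # prints "The sum is 5050."
```

The point of the loop is that the tool call happens inside the reasoning, so the model can act on the result before it commits to an answer.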

“Is O3 really better than O1?”—Why the answer isn’t obvious

Benchmarks say O3 is far ahead, but in everyday use that gap can vanish. To spot the difference, I have to quiz each model in my own specialty. When I leave that comfort zone, even in fields I half understand, it feels like judging whether Einstein or Feynman is smarter just by reading their answers in particle physics. Beyond a tight expert test, the edges blur.

Most of us judge an AI the same way we judge a person we just met: by how it feels to talk to. If the bot uses a warm tone, drops a witty joke, or writes crisp, magazine-style prose, we think, “Wow, this one’s brilliant.” But a pleasant voice is not the same as a sharper mind.

Under the hood, two models can reach very different logical answers—yet that difference is hidden unless you already know the subject cold or you run a formal benchmark. Most users don’t do either. Instead they follow quick-and-easy signals:

- Did it understand my messy question?

- Did it respond in my preferred style?

- Did it agree with me?

All those cues shape trust far more than the actual quality of the reasoning.

That’s why a weaker model that flatters you, or mirrors your writing style, can feel smarter than a stronger one that is a bit stiff or blunt. It’s the same “halo effect” we fall for in human conversation, now transferred to chatbots. Unless we bring expert-level tests or deep domain knowledge to the table, we simply can’t spot which lightbulb is brighter—we just notice which one makes the room feel nicer.
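One way to take the “feel” out of the comparison is to score only the final answers against a key written by an expert. The sketch below uses made-up questions, answers, and model names purely for illustration; in it, the model that might feel friendlier in conversation is simply the one that gets fewer answers right.

```python
# Hypothetical answer key written by a domain expert.
ANSWER_KEY = {"q1": "42", "q2": "7", "q3": "0.5"}

# Hypothetical final answers extracted from two models' replies.
# Imagine model_a is chatty and flattering and model_b is terse.
MODEL_ANSWERS = {
    "model_a": {"q1": "42", "q2": "8", "q3": "0.25"},
    "model_b": {"q1": "42", "q2": "7", "q3": "0.5"},
}


def accuracy(answers, key):
    """Share of questions whose final answer matches the key exactly."""
    correct = sum(answers.get(q) == expected for q, expected in key.items())
    return correct / len(key)


scores = {name: accuracy(ans, ANSWER_KEY) for name, ans in MODEL_ANSWERS.items()}
print(scores)
```

Tone, length, and flattery never enter the score, which is exactly what makes the gap visible.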

To put it simply:

We’re not smart enough to notice the extra smartness of new AI models.

“Is this AGI?” and the “we don’t know what to ask” problem

O3 aces the ARC-AGI test, solves Olympiad-level math, and can handle PhD exams in dozens of fields. On paper that sounds like “job done” regarding Artificial General Intelligence (AGI). Yet the model still waits for us to tell it what to do. It doesn’t pick its own goals, launch side projects, or run your company while you sleep. In practice it’s a super-smart copilot, not a self-driving brain. If you hand it only easy chores, you’ll never notice the gulf between human and machine IQ.

That puts the spotlight on us, not the model, at least for now. Imagine having fifty Einsteins on your team 24/7 and still asking them to proofread emails. Until we learn to set bolder challenges like “Design a carbon-negative cement that costs under €50 a ton” or “Draft a plan to eradicate malaria in five years on a €10 million budget”, true AGI could stroll in and most people wouldn’t even blink.

In short, the frontier models are already mighty. The missing piece is our ability to craft the right questions. If we don’t stretch the system, we’ll never see how far it can really go!

O3’s real breakthrough: one brain, thousands of PhDs

Forget the headline IQ. The jaw-dropping part is that one model now shows doctorate-level skill in almost every field you can name: history, quantum physics, copyright law, full-stack coding, and more. In the past, true polymaths were rare.

Today the same “mind” can spin up a Shakespearean sonnet, debug a Kubernetes cluster, and draft a watertight licensing agreement without breaking stride. Nothing in human history looks like this!

Now picture what happens when that brain can walk through our workplace tools on its own—clicking through contracts, scrolling Teams chats, or updating Jira tickets without any special plug-ins. Adoption won’t creep along the way electricity or smartphones did; it will jump.

There’s a delicious irony here: for decades we built user interfaces so humans could talk to machines. Suddenly the machines are smart enough to read our interfaces, but those very menus and dashboards slow them down—like a cheetah stuck in traffic! The moment AIs understand most of the “human stuff” on the screen and can also whisper straight to other AIs under the hood, we may hit the tipping point futurists call the Singularity.

In short, O3 isn’t just a higher score: it’s the first glimpse of unlimited expertise living in a single, scalable mind ready to plug into every corner of work and life.

Getting ready for a workplace filled with “infinite polymaths”

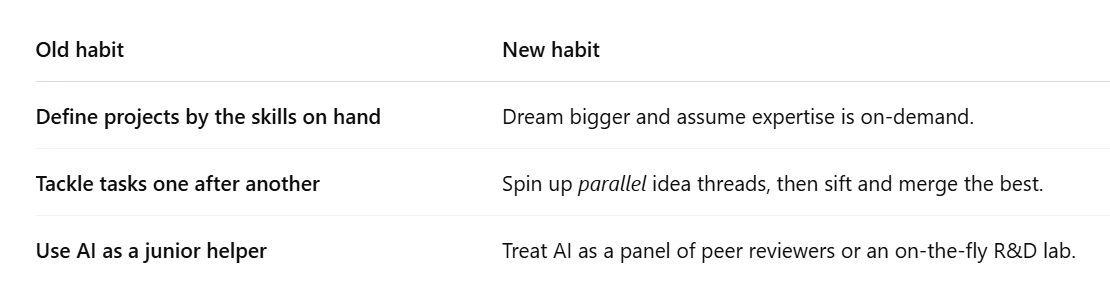

For most of history, the limit on any project was the brain-power in the room. If your team didn’t have a top-tier statistician, aerospace engineer, or medieval-Latin expert, you simply stayed within reach of what you knew. Those ceilings just disappeared. With models like O3 on call, you can rent world-class expertise the way you rent cloud storage—instantly and at scale. That means the old rules for planning, brainstorming, and quality control all need a refresh.

Here’s how to shift your habits:

The bottom line: “Good enough for humans” is no longer the bar. Aim for questions—and answers—that only an army of polymaths could handle, because that army is now just a prompt away.

Take-aways

After a year of breathtaking progress, we now live in a world where a single model can out-reason most people, master doctoral topics across every discipline, and plug straight into the tools that run our companies. Yet what really matters is how we rise to meet it. Unless we learn to ask bigger, bolder questions—and let these “infinite polymaths” roam freely through our workflows—the step from clever assistant to transformative partner will remain invisible. The future is already here, waiting for the right prompt!